
AMD Instinct™ GPU Architecture and Control Methods Optimized for AI Applications

#AI-RAN #AI #AMD #GPU

Feb 16, 2026

SoftBank Corp.


In this article, as part of a joint validation initiative launched by the SoftBank Research Institute of Advanced Technology (“RIAT”) in collaboration with Advanced Micro Devices, Inc. (“AMD”), we are optimizing GPU allocation to meet the specific requirements of AI applications. By leveraging the partitioning capabilities of AMD Instinct™ GPUs, we have extended our proprietary orchestrator to enable more effective GPU assignment for AI workloads.

1. Background: Operational Challenges in Inference Services and GPU Resources

As generative AI adoption accelerates, demand continues to grow for applications that use AI models such as large language models (LLMs). To meet this demand, RIAT has been developing next-generation AI infrastructure capable of flexibly controlling compute resources based on an AI application’s model size and runtime requirements. As part of this effort, we have been developing an orchestrator to manage compute resources and optimally allocate them to AI applications.

In inference services, GPU requirements constantly fluctuate depending on factors such as the size of the LLM, concurrency, use cases, and request volume. GPUs are also expensive compute resources, so service providers must serve multiple use cases and multiple models from a finite pool of GPU resources.

If allocations cannot be adjusted at a fine granularity as demand fluctuates, resource mismatches become more likely, for example when an unnecessarily large GPU is assigned to a small model. The result is underutilized GPU capacity, commonly referred to as slack. Slack is not merely “waste”; it also makes it harder to provide flexible services as demand rises and falls. In practice, slack can prevent providers from securing sufficient capacity at the right time for larger models or for multiple smaller models, leading to request backlogs and startup delays.

For these reasons, it is crucial for service providers to allocate the exact amount of GPU resources needed, in line with AI application requirements such as model size and concurrency. If allocations can be optimized dynamically as demand changes, it becomes easier to reduce slack while avoiding resource contention—improving utilization, delivery efficiency, and overall service quality.

2. Why AMD Instinct™ GPUs: The Option of “Splitting” a GPU for Use

One approach to reducing slack and improving utilization and delivery efficiency, as discussed above, is to partition a GPU according to the workload’s needs.

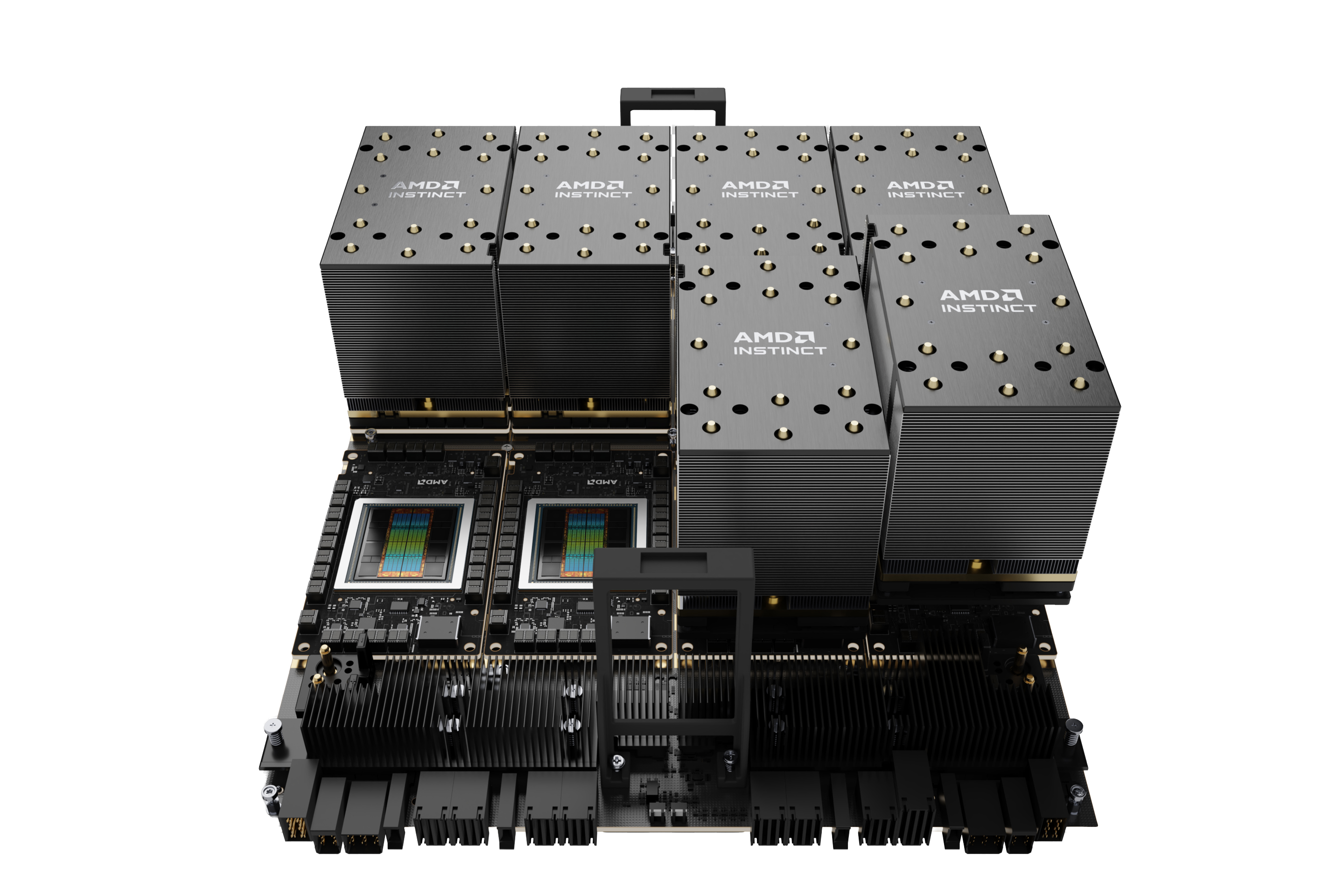

AMD Instinct™ GPUs feature high-capacity HBM* memory and provide a mechanism to divide GPU resources (GPU partitioning), allowing a single physical GPU to be partitioned into multiple logical devices as needed. This makes it possible to allocate “only what is required” to each inference service and to run multiple inference services in parallel on the same physical GPU, thereby reducing slack. As a result, limited GPU capacity can be utilized with finer granularity, expanding operational options even in environments with high demand variability.

*MI300X: 192 GB HBM3; MI325X: 256 GB HBM3e; MI355X/MI350X: 288 GB HBM3e
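To make slack concrete, the following sketch compares the memory left unused when a small model is given a whole GPU versus one of several equal-sized logical GPUs. It is a simplified illustration under stated assumptions: a GPU Instance's usable memory is taken to be an even share of the physical GPU's HBM, and the model is assumed to need only its BF16 weights (2 bytes per parameter). Real footprints also include KV cache and runtime overhead.

```python
def weights_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes); BF16 uses 2 bytes/param."""
    return num_params * bytes_per_param / 1e9


def slack_gb(allocated_gb: float, required_gb: float) -> float:
    """Memory allocated to a workload but not used by it."""
    if required_gb > allocated_gb:
        raise ValueError("allocation is too small for the workload")
    return allocated_gb - required_gb


HBM_GB = 192  # MI300X capacity from the footnote above
required = weights_gb(8e9)  # roughly 8B parameters, weights only

for instances in (1, 2, 4, 8):
    share = HBM_GB / instances  # assumption: HBM split evenly across GPU Instances
    print(f"{instances} instance(s): {share:.0f} GB each, "
          f"slack {slack_gb(share, required):.0f} GB")
```

Under these assumptions, giving the whole GPU to such a model leaves most of its memory idle, while finer partitions shrink the per-service slack.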

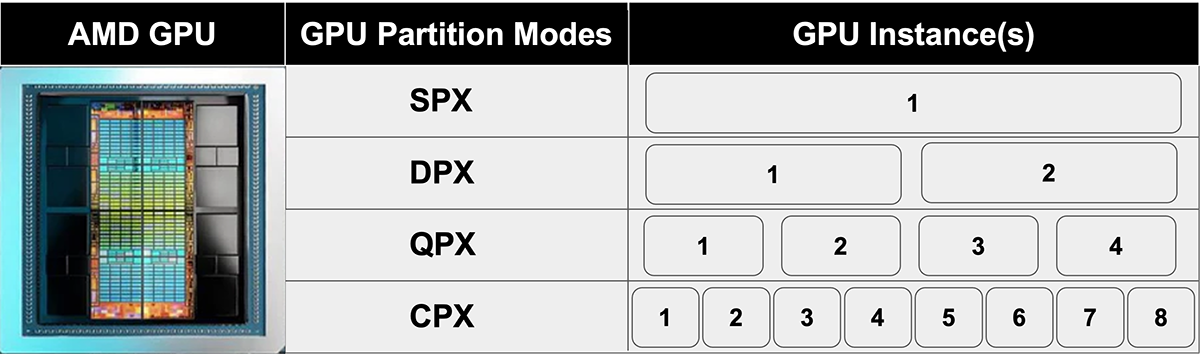

Figure 1. Compute Partitioning on AMD Instinct™ GPUs (created by the author based on multiple documents)

AMD Instinct™ GPUs define their logical configuration from two perspectives: compute resources and memory configuration. Concretely, they provide:

- Compute Partitioning (Figure 1), which uses the XCD (Accelerator Complex Die), a unit of the GPU’s compute resources, as the partitioning unit.

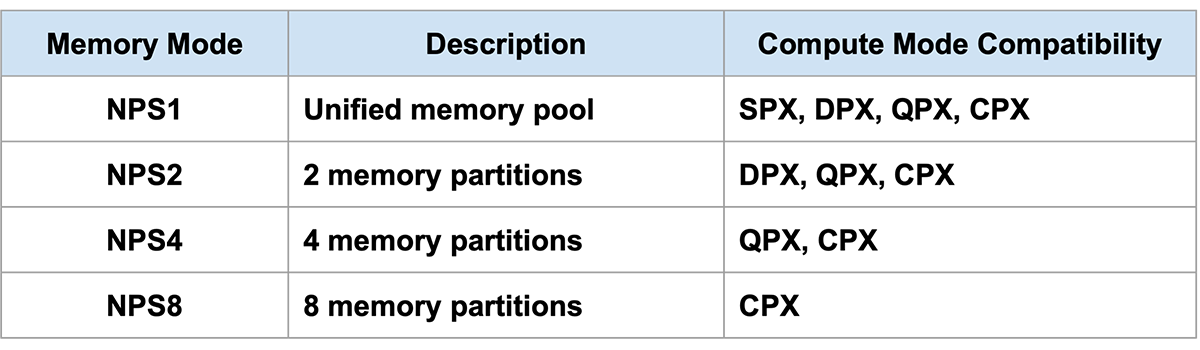

- Memory Partitioning (Table 1), which uses NPS (NUMA Per Socket), a unit for controlling the memory configuration visible from a GPU Instance, as the partitioning unit.

In GPU partitioning, you configure partitions on both the compute side and the memory side, and by combining these settings you can use one physical GPU as multiple logical GPUs (GPU Instances).

Compute Partitioning supports SPX / DPX / QPX / CPX modes, which allow a single AMD Instinct™ GPU to be split into 1, 2, 4, or 8 GPU Instances, respectively.
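Because each mode name maps directly to an instance count, the number of logical GPUs a node exposes is a simple product. The sketch below assumes every physical GPU in the node uses the same mode; with eight GPUs it reproduces the transition from 8 to 16 instances observed in the validation in Section 4. The function name is illustrative.

```python
# GPU Instances per physical GPU for each compute-partitioning mode,
# as listed in the text (SPX/DPX/QPX/CPX -> 1/2/4/8).
COMPUTE_MODES = {"SPX": 1, "DPX": 2, "QPX": 4, "CPX": 8}


def instances_per_node(physical_gpus: int, mode: str) -> int:
    """Logical GPUs visible on a node when every physical GPU uses the same mode."""
    try:
        per_gpu = COMPUTE_MODES[mode.upper()]
    except KeyError:
        raise ValueError(f"unknown compute partition mode: {mode!r}") from None
    return physical_gpus * per_gpu


print(instances_per_node(8, "SPX"))  # 8
print(instances_per_node(8, "DPX"))  # 16
```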

Memory Partitioning (NPS) configures the addressable HBM regions and thereby governs how each GPU Instance accesses memory. Supported modes include NPS1, NPS2, NPS4, and NPS8; choosing an appropriate mode can improve memory locality and, with it, memory access performance.

The two types of partitioning are combined according to predefined compatibility rules, under the constraint that the number of memory partitions cannot exceed the number of compute partitions (see Table 1 below).

Table 1. Memory Partitioning on AMD Instinct™ GPUs (created by the author based on multiple documents)

By constructing GPU Instances through a combination of Compute Partitioning and Memory Partitioning, service providers can allocate the precise amount of GPU resources needed for specific model sizes and use cases, thereby improving operational efficiency.
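The general compatibility constraint between the two partitioning types (memory partitions must not exceed compute partitions) can be expressed as a simple check. This sketch encodes only that inequality; a given GPU model may support only some of these modes and combinations, so a real orchestrator would validate against the per-model matrix in Table 1 and AMD's partitioning documentation.

```python
COMPUTE_PARTITIONS = {"SPX": 1, "DPX": 2, "QPX": 4, "CPX": 8}
MEMORY_PARTITIONS = {"NPS1": 1, "NPS2": 2, "NPS4": 4, "NPS8": 8}


def is_compatible(compute_mode: str, memory_mode: str) -> bool:
    """Apply the general rule: memory partitions <= compute partitions."""
    return MEMORY_PARTITIONS[memory_mode] <= COMPUTE_PARTITIONS[compute_mode]


# Every (compute, memory) pair allowed by the rule alone.
valid = [(c, m) for c in COMPUTE_PARTITIONS for m in MEMORY_PARTITIONS
         if is_compatible(c, m)]
print(valid)
```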

Reference: AMD Partition overview

3. Our Approach: GPU Control and Application Deployment via the Orchestrator

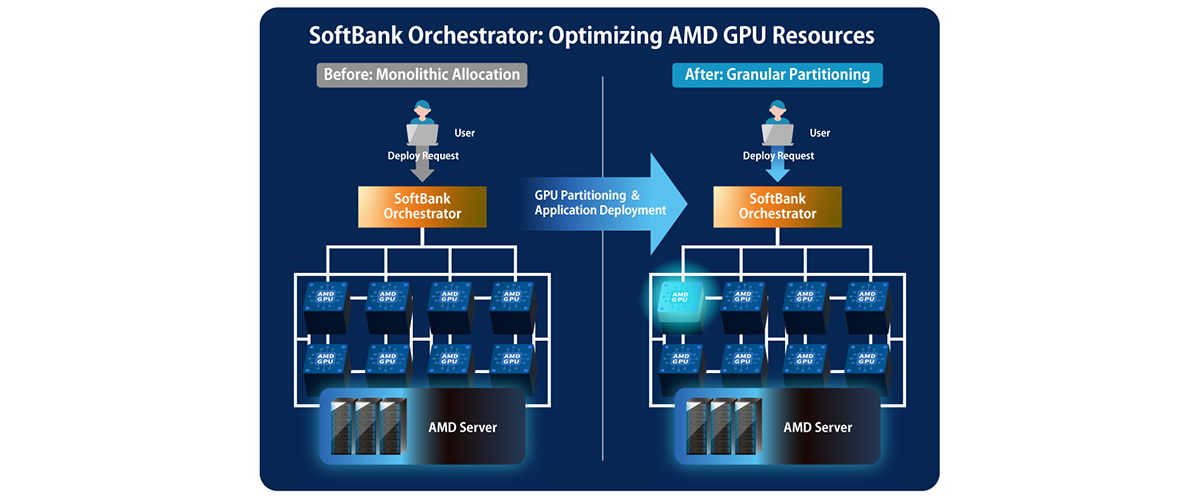

The orchestrator under development at RIAT receives deployment requests for AI applications and automates the process up to bringing up an inference service. In this initiative, we implemented a mechanism that combines the AMD Instinct™ GPU partitioning feature with our orchestrator so that GPU resources within a node can be dynamically partitioned and assigned based on the AI model’s requirements, and the inference service can then be started.

In this article, we focus on how GPU resources are partitioned and assigned within a single node. Note that the selection of the target node for placement is delegated to the existing scheduling mechanism.

Figure 2. GPU Allocation Flow for Deployment Requests

4. Control Sequence: “Partition → Assign → Launch”

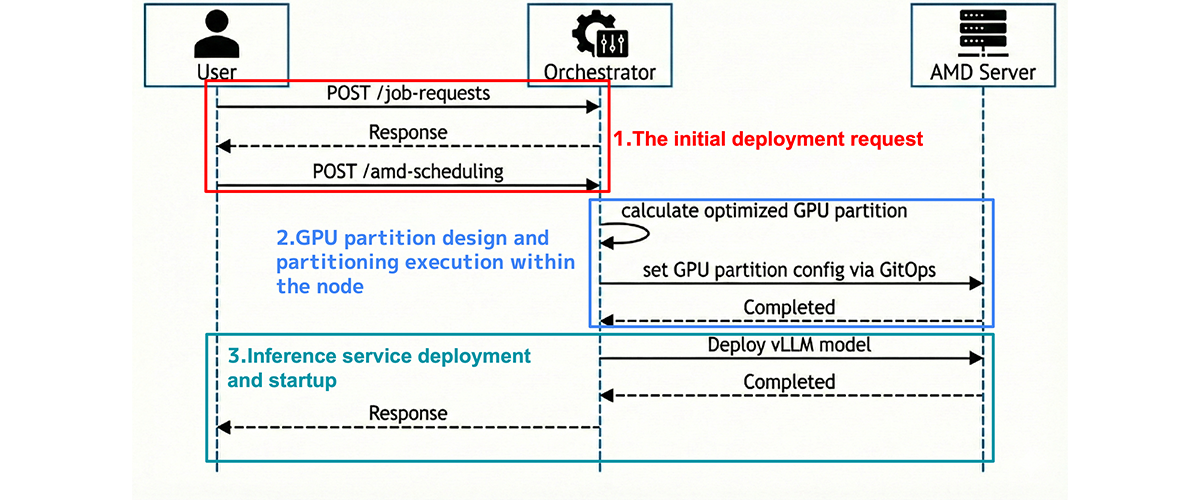

The following outlines the end-to-end workflow, from the initial deployment request to the launch of the inference service.

Figure 3. Sequence diagram of orchestrator control

1. The initial deployment request

An AI application submits a deployment request to the orchestrator for an inference service that uses a specific AI model (in this validation, Llama-3.1-8B-Instruct).

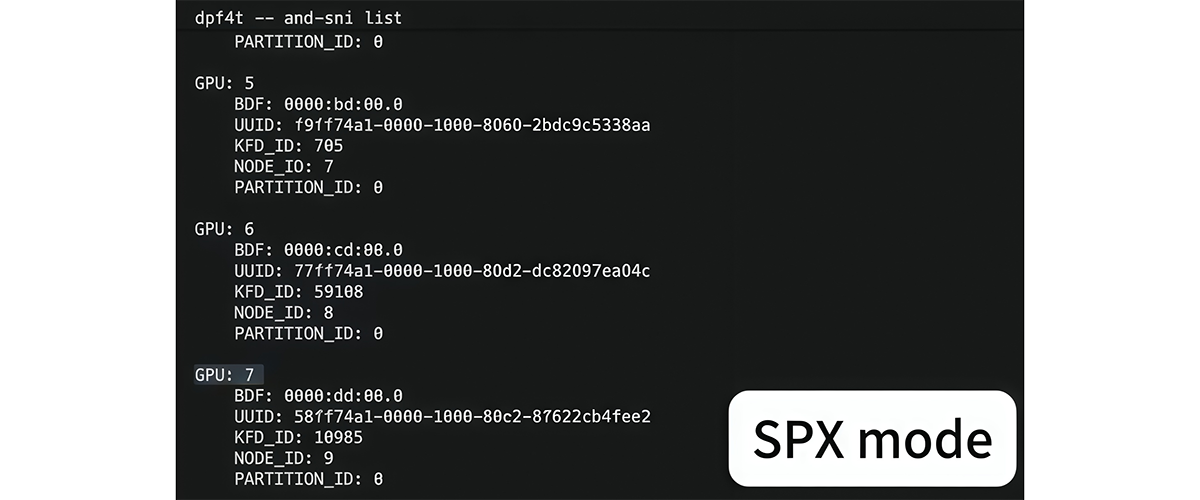

At this stage, all AMD Instinct™ GPUs on the server (node) are operating in SPX mode. As shown above, the ‘amd-smi list’ command lists eight instances, with GPU IDs 0–7.

2. GPU partition design and execution within the node

Based on the received model requirements, the orchestrator determines the GPU resource size required within the node. In this case, it applies GPU partitioning so that GPU resources can be allocated in smaller units.
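The orchestrator's sizing logic is not described in detail here. As a hypothetical illustration of the idea, the sketch below picks the finest compute mode whose per-instance memory still covers a workload's estimated requirement. `DeploymentRequest`, `required_gb`, and the even split of HBM across instances are our assumptions; a production policy would also weigh compute (XCD count), concurrency, and which NPS modes the GPU actually supports.

```python
from dataclasses import dataclass

# Compute modes from coarsest to finest (GPU Instances per physical GPU).
MODES = [("SPX", 1), ("DPX", 2), ("QPX", 4), ("CPX", 8)]


@dataclass
class DeploymentRequest:
    """Hypothetical request shape; field names are illustrative only."""
    model: str
    required_gb: float  # estimated need per instance: weights + KV cache + overhead


def choose_mode(req: DeploymentRequest, hbm_gb: float) -> str:
    """Return the finest mode whose even memory share still fits the request."""
    best = None
    for name, instances in MODES:
        if hbm_gb / instances >= req.required_gb:
            best = name  # finer modes leave less slack per instance
    if best is None:
        raise ValueError(f"{req.model} does not fit on a single GPU ({hbm_gb} GB)")
    return best


# 60 GB is an arbitrary illustrative figure, not a measured requirement.
req = DeploymentRequest(model="example-model", required_gb=60)
print(choose_mode(req, hbm_gb=192))  # DPX: 96 GB per instance fits, 48 GB (QPX) does not
```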

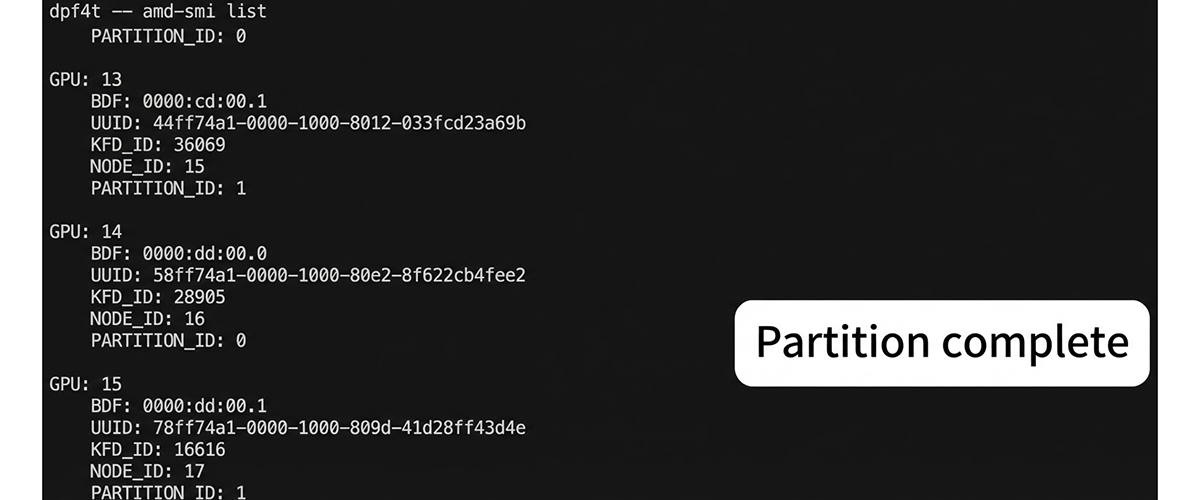

With this configuration, all AMD Instinct™ GPUs in the node are logically partitioned in DPX mode, and ‘amd-smi list’ now lists 16 instances, with GPU IDs 0–15.

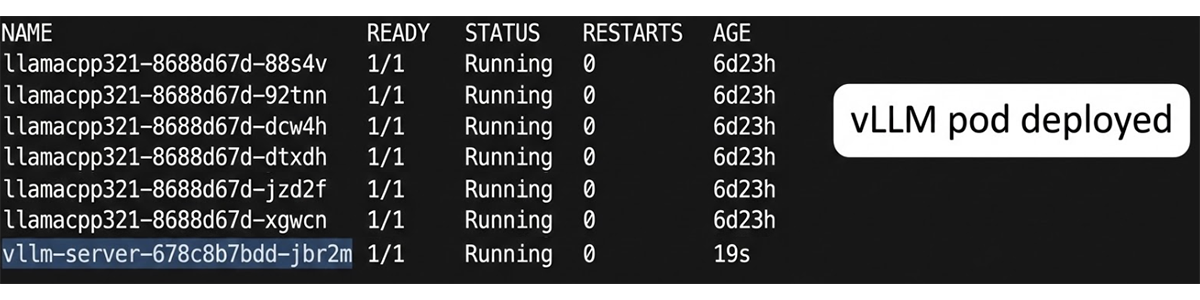

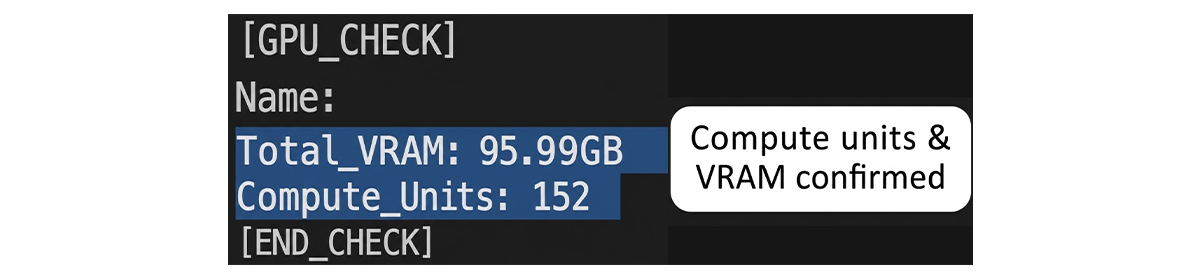
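After repartitioning, an orchestrator can confirm that the node exposes the expected number of GPU Instances before assigning them. The sketch below counts device entries in `amd-smi list` text output. It assumes each device block starts with a line of the form `GPU: <id>`; that format should be checked against the installed amd-smi version, and a structured (JSON) output mode, where available, is more robust to parse. The sample text is synthetic and abbreviated, and the helper names are hypothetical.

```python
import re

# Assumed header line for each device in `amd-smi list` text output.
GPU_LINE = re.compile(r"^GPU:[ \t]*(\d+)[ \t]*$", re.MULTILINE)


def gpu_ids(amd_smi_list_output: str) -> list[int]:
    """Extract GPU IDs from `amd-smi list` text, assuming 'GPU: <id>' header lines."""
    return [int(m.group(1)) for m in GPU_LINE.finditer(amd_smi_list_output)]


def check_partitioned(output: str, physical_gpus: int, instances_per_gpu: int) -> None:
    """Raise unless the listed IDs are exactly 0 .. physical_gpus * instances_per_gpu - 1."""
    expected = list(range(physical_gpus * instances_per_gpu))
    found = sorted(gpu_ids(output))
    if found != expected:
        raise RuntimeError(f"expected GPU IDs {expected[0]}-{expected[-1]}, found {found}")


# Synthetic, abbreviated output for a node of 8 GPUs in DPX mode (16 instances).
sample = "\n".join(f"GPU: {i}\n    BDF: N/A\n    UUID: N/A" for i in range(16))
check_partitioned(sample, physical_gpus=8, instances_per_gpu=2)
print(len(gpu_ids(sample)))  # 16
```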

3. Inference service deployment and startup

Once GPU partitioning completes, the orchestrator assigns the necessary GPU Instances to the inference service and launches the inference container.

As a result, a vLLM Pod starts up, and we can confirm that the inference service is running with both compute (XCD) and memory (NPS) appropriately partitioned.
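The assign and launch steps can be pictured as reserving free GPU Instance IDs from the node's pool and starting a vLLM server that sees only those IDs. This is a hypothetical sketch, not RIAT's implementation: `InstancePool` and `launch_spec` are invented names, and passing the IDs through ROCm's `HIP_VISIBLE_DEVICES` variable is an assumption. In Kubernetes, device assignment to a Pod is commonly handled by a device plugin, and the mechanism the orchestrator actually uses is not described here.

```python
class InstancePool:
    """Tracks which GPU Instance IDs on one node are free (illustrative only)."""

    def __init__(self, total_instances: int) -> None:
        self.free = set(range(total_instances))
        self.assigned: dict[str, list[int]] = {}

    def assign(self, service: str, count: int) -> list[int]:
        """Reserve the lowest-numbered free instances for a service."""
        if count > len(self.free):
            raise RuntimeError(f"only {len(self.free)} free instance(s), {count} requested")
        ids = sorted(self.free)[:count]
        self.free.difference_update(ids)
        self.assigned[service] = ids
        return ids

    def release(self, service: str) -> None:
        """Return a service's instances to the free pool."""
        self.free.update(self.assigned.pop(service))


def launch_spec(model: str, ids: list[int]) -> tuple[list[str], dict[str, str]]:
    """Command and environment for a vLLM server pinned to the given instances.

    Assumes device visibility is controlled via HIP_VISIBLE_DEVICES and that a
    multi-instance assignment uses tensor parallelism across those instances.
    """
    argv = ["vllm", "serve", model, "--tensor-parallel-size", str(len(ids))]
    env = {"HIP_VISIBLE_DEVICES": ",".join(str(i) for i in ids)}
    return argv, env


pool = InstancePool(total_instances=16)  # 8 GPUs in DPX mode
ids = pool.assign("llama-3.1-8b", count=1)
argv, env = launch_spec("meta-llama/Llama-3.1-8B-Instruct", ids)
print(" ".join(argv))
print(env)
```

Assigning the lowest free IDs first is just a simple deterministic policy; a real scheduler might instead prefer instances that share a physical GPU or NUMA domain.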

5. Advancing GPU Resource Allocation for Future Use

As generative AI usage expands and the requirements for AI applications—particularly inference—become increasingly diverse, RIAT continues its technical validation of next-generation AI infrastructure.

In inference services, compute and memory requirements fluctuate with model size, concurrency, and workload variability. As a result, operational models that allocate GPUs in a fixed manner are prone to both slack and contention. To address this, RIAT collaborated with AMD to leverage the GPU partitioning capabilities of AMD Instinct™ GPUs. Through this initiative, we developed and validated a control mechanism that enables the orchestrator to partition GPUs based on requirements, assign them optimally, and automate the end-to-end “Partition → Assign → Launch” workflow.

Going forward, anticipating broader adoption of small- and mid-sized models, we will further improve resource allocation by leveraging features such as GPU partitioning. We will also explore flexible resource control strategies, with the aim of extending this orchestrated optimization approach to a wider variety of AI accelerators.