Policy

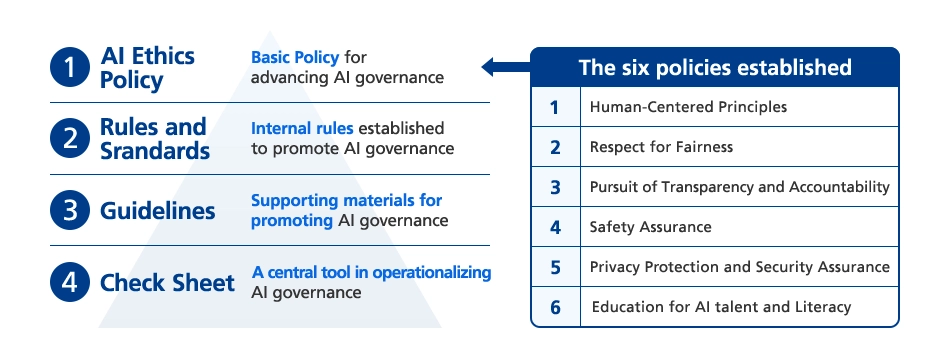

With careful consideration of the ethical risks and social impacts associated with the use of AI, we are committed to ensuring that AI is used correctly and responsibly for the well-being of all people. To guide this vision, we have established the AI Ethics Policy as our conceptual framework.

To put this policy into practice, we have developed a range of supporting documents, including internal regulations, guidelines, and checklists. These resources are used across the organization to promote AI practices that are human-centric, fair, transparent, accountable, and otherwise responsible. Through these efforts, we aim to ensure the proper use of technology in harmony with society and to realize AI that is trusted by all.

AI ethics and governance framework

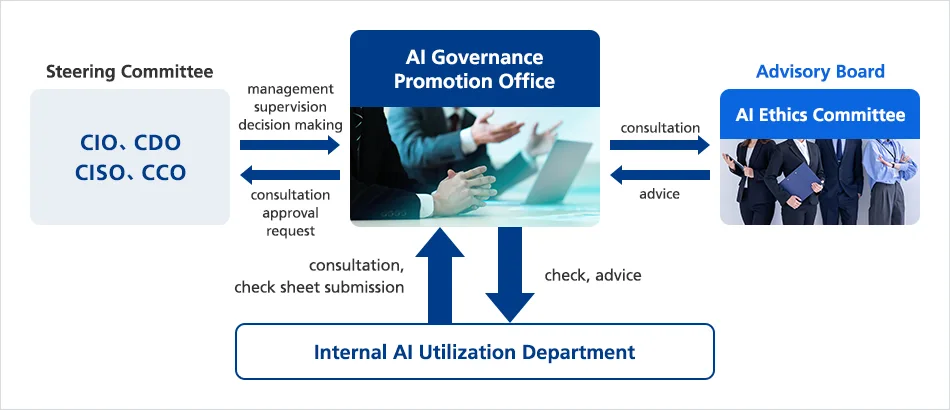

To comply with all relevant laws, guidelines, and social norms concerning the development and use of AI, and to ensure that AI is utilized appropriately for the well-being of people, we have established the AI Governance Promotion Office — an independent expert division dedicated to promoting governance across all internal AI-related departments.

Our Steering Committee is supported in its oversight role by key executives, including the Chief Information Officer (CIO), Chief Data Officer (CDO), Chief Information Security Officer (CISO), and Chief Compliance Officer (CCO). In addition, the AI Ethics Committee, composed of experts from both inside and outside the company, serves as an Advisory Board, providing guidance that contributes to the advancement of governance.

In cases where concerns arise regarding the inappropriate use of AI or its potential social impact, the Chairperson of the AI Ethics Committee collaborates with relevant department heads to promptly assess the situation, evaluate risks, and formulate and implement appropriate responses.

Moreover, in the event of a significant ethical or social incident involving AI, we will establish an Emergency Response Headquarters led by the President. This unit will coordinate with both internal and external stakeholders to ensure an effective response and, when necessary, report appropriately to relevant government ministries and agencies.

AI Ethics Committee

As the use of AI continues to expand across various industries, its applications are expected to grow in diversity and technical sophistication. At the same time, AI remains a technology that requires careful ethical consideration. Depending on how it is utilized, AI may lead to discriminatory evaluations or biased selection processes.

To address these concerns, we have established the AI Ethics Committee, chaired by Tadashi Iida, Senior Vice President and Chief Information Security Officer (CISO), who also oversees AI and cybersecurity. The committee includes participation from external experts and engages in discussions and proposals on a range of ethical issues related to AI. Our goal is to realize objective and effective AI governance from a user-oriented perspective.

The AI Ethics Committee is responsible for the following:

- Sharing and proposing policies, initiatives, and plans related to AI ethics
- Disseminating information that supports ethical AI practices
- Reviewing internal AI usage and consultation cases, identifying ethical issues, and offering advice

Supporting AI Ethics and Governance Across Group Companies

We provide support for AI ethics and governance to our affiliated companies, including subsidiaries and related entities, with the aim of reducing and proactively preventing risks associated with the use of AI technologies. This includes promoting information sharing on appropriate risk countermeasures.

In addition, many of our affiliated companies have adopted the SoftBank AI Policy as a guiding framework to ensure appropriate and consistent use of AI in both application and research and development activities.

Initiatives

As we continue to strengthen our AI ethics and governance framework, we conduct ethical reviews, provide expert guidance, and carry out risk assessments using a risk-based approach during the development and deployment of AI technologies within the company. To continuously monitor and evaluate AI usage and its social impact, we have established an AI Governance Monitoring Function, which facilitates close collaboration with both internal and external stakeholders. These activities are conducted with reference to domestic and international guidelines, enabling us to revise countermeasures and explore new initiatives as needed.

However, simply establishing rules is not sufficient to embed desirable behavior across the organization. For rules to have meaning, they must be fully understood by all stakeholders and naturally integrated into their daily work practices.

Therefore, in addition to developing robust governance rules, we place strong emphasis on education and training to communicate these principles clearly. We believe that governance functions effectively only when each employee understands the context and intent behind the rules and applies them in their own actions.

Risk-based approach

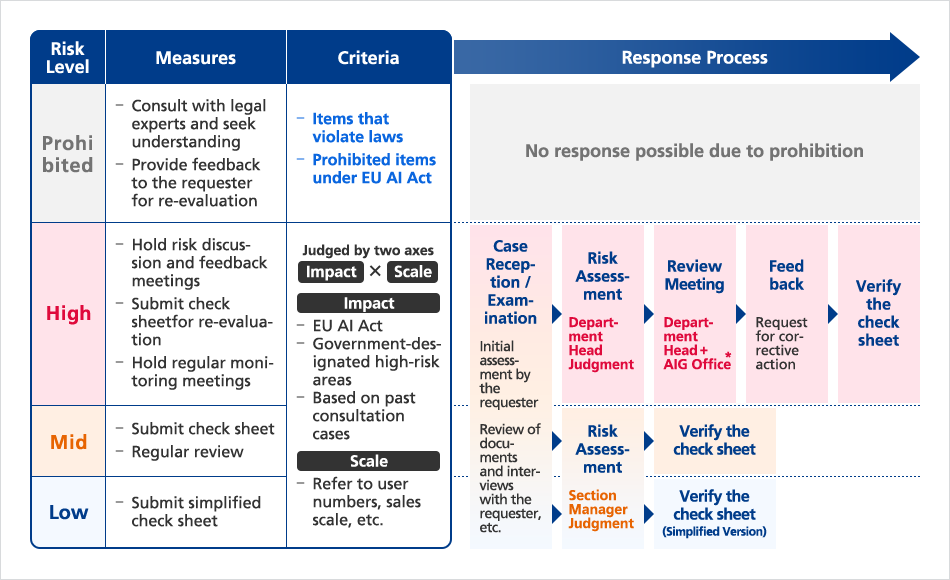

We have adopted a risk-based approach for conducting AI ethics and governance risk assessments. Risk levels are defined using a four-tier scale: Prohibited, High, Middle, and Low.

In defining these risk levels, we consider the potential social and business impact in the event that a risk materializes. At the same time, we ensure that the criteria are designed to be simple and clear, allowing employees to classify risks easily and consistently.
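As an illustration only, the four-tier scale could be expressed as a small classification helper. This is a minimal sketch under stated assumptions: the tier names come from the text above, but the `UseCase` fields, the 1-to-3 impact scores, the `is_banned_use` flag, and the mapping from scores to tiers are all hypothetical and do not represent the company's actual criteria.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    """The four tiers named in the text."""
    PROHIBITED = "Prohibited"
    HIGH = "High"
    MIDDLE = "Middle"
    LOW = "Low"


@dataclass(frozen=True)
class UseCase:
    """Hypothetical description of an AI use case under review."""
    name: str
    social_impact: int    # assumed scale: 1 (minor) to 3 (severe)
    business_impact: int  # assumed scale: 1 (minor) to 3 (severe)
    is_banned_use: bool = False  # assumed flag for uses that are never allowed


def classify(use_case: UseCase) -> RiskLevel:
    """Map a use case to a tier using the worse of its two impact scores."""
    if use_case.is_banned_use:
        return RiskLevel.PROHIBITED
    for score in (use_case.social_impact, use_case.business_impact):
        if score not in (1, 2, 3):
            raise ValueError("impact scores must be 1, 2, or 3")
    worst = max(use_case.social_impact, use_case.business_impact)
    # Assumed mapping: the higher of the two impacts decides the tier.
    return {3: RiskLevel.HIGH, 2: RiskLevel.MIDDLE, 1: RiskLevel.LOW}[worst]


if __name__ == "__main__":
    example = UseCase("resume screening assistant", social_impact=3, business_impact=2)
    print(example.name, "->", classify(example).value)
```

Taking the worse of the two scores keeps the rule simple enough for employees to apply consistently, which is the design goal the text describes; a real scheme would likely use more detailed criteria.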

* AI Governance Promotion Office

Education

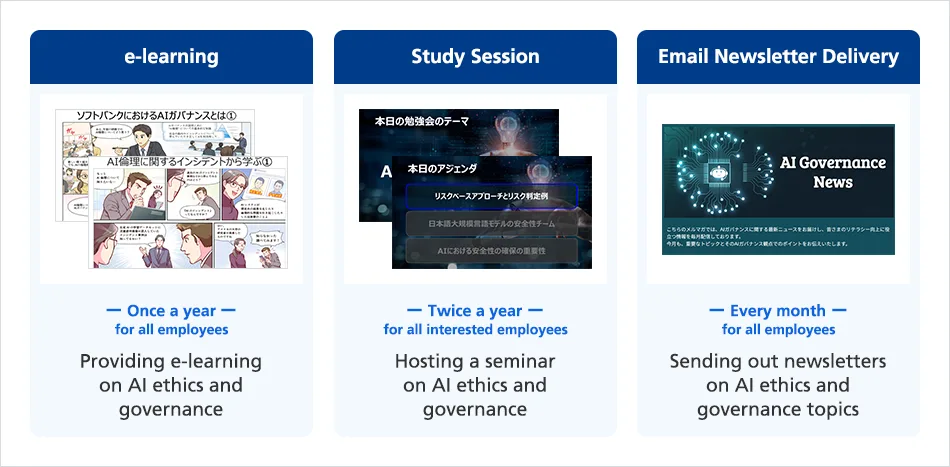

We place strong emphasis on promoting AI ethics and governance education to ensure that internal rules and knowledge related to AI are properly recognized, put into practice, and firmly embedded across the organization. As of FY2024, we have implemented a comprehensive education program that includes:

- Annual e-learning sessions
- Biannual study sessions
- Monthly email newsletters

These initiatives are directed at all employees, including executives.

Our educational content focuses on enhancing AI literacy across the organization. Topics include:

- Case studies of AI-related incidents occurring domestically and internationally
- Key considerations when using AI, including generative AI, such as bias, data leakage, copyright infringement, and hallucination risks
- Updates on social trends and developments related to AI ethics

Collaboration with stakeholders

We regularly share the details of our initiatives with relevant government ministries and organizations. These efforts have been highly regarded and are featured in the AI Guidelines for Business*.

Going forward, we will continue to monitor developments in domestic and international regulations and guidelines, while pursuing practical and forward-thinking initiatives and ensuring their transparency through public disclosure.
